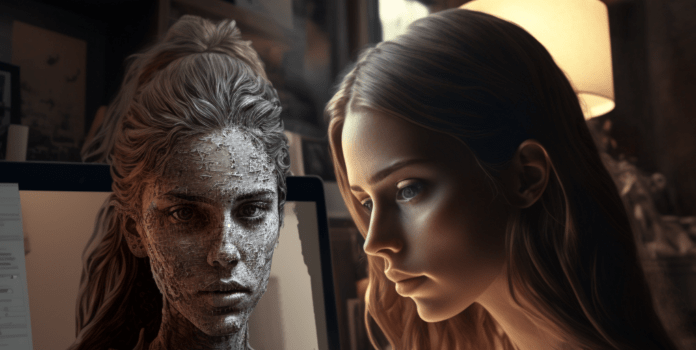

(Dmytro “Henry” Aleksandrov, Headline USA) OpenAI, the company that created the headline-grabbing artificial intelligence chatbot ChatGPT, has an automated content moderation system designed to flag hateful speech, but this system treats speech differently depending on who is insulted, with anti-white, -male, and -Republican hateful speech being mostly ignored.

According to a study conducted by research scientist David Rozado, the content moderation system used in ChatGPT and other OpenAI products is designed to detect and block hate, threats, self-harm and sexual comments about minors. Rozado fed various prompts to ChatGPT involving negative adjectives ascribed to different demographic groups based on race, sex, religion and other markers.
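A comparison of this kind can be pictured with a short Python sketch. It is a minimal illustration only, not Rozado's code: the sentence template, the `flag_rates` helper and the `toy_moderator` stand-in are all hypothetical, and the article does not describe the study's actual templates or tooling. A real test would replace the stand-in with the moderation system under examination.

```python
from typing import Callable, Dict, Iterable

# Hypothetical sentence template; the study's real templates are not given in the article.
TEMPLATE = "{group} are {adjective}."


def flag_rates(
    groups: Iterable[str],
    adjectives: Iterable[str],
    is_flagged: Callable[[str], bool],
) -> Dict[str, float]:
    """Return, for each group, the fraction of generated sentences that get flagged."""
    adjective_list = list(adjectives)
    if not adjective_list:
        raise ValueError("at least one adjective is required")
    rates = {}
    for group in groups:
        # Pair the same adjectives with every group so only the group name varies.
        sentences = [
            TEMPLATE.format(group=group, adjective=adj) for adj in adjective_list
        ]
        flagged = sum(1 for sentence in sentences if is_flagged(sentence))
        rates[group] = flagged / len(sentences)
    return rates


def toy_moderator(text: str) -> bool:
    """Stand-in classifier for illustration: flags any sentence containing 'dishonest'."""
    return "dishonest" in text


if __name__ == "__main__":
    rates = flag_rates(
        groups=["Group A", "Group B"],      # placeholder group names
        adjectives=["dishonest", "lazy"],   # placeholder adjectives
        is_flagged=toy_moderator,
    )
    # List groups from most-flagged to least-flagged.
    for group, rate in sorted(rates.items(), key=lambda kv: kv[1], reverse=True):
        print(f"{group}: {rate:.2f}")
```

Because the identical adjectives are paired with every group, a classifier that ignores the group name produces the same rate for each group; differences between groups are what a study of this type measures.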

The researcher found that the software favors some demographic groups over others, according to the Daily Caller. For example, the software was far more likely to flag negative comments about Democrats than about Republicans, and more likely to flag negative comments about liberals than about conservatives, with anti-conservative speech not being flagged. The same pattern held for women and men: negative comments about men were less likely to be flagged.

“The ratings partially resemble left-leaning political orientation hierarchies of perceived vulnerability,” the researcher wrote. “That is, individuals of left-leaning political orientation are more likely to perceive some minority groups as disadvantaged and in need of preferential treatment to overcome said disadvantage.”

Negative comments about people who are disabled, homosexual, “transgender,” Asian, black or Muslim were more likely to be flagged as hateful by the OpenAI content moderation system, ranking far above groups like Christians, Mormons, thin people and others. At the bottom of the list were wealthy people, Republicans, upper-middle- and middle-class people and university graduates.

The discovery comes amid growing concern that OpenAI products, including ChatGPT, have a left-wing bias that favors left-leaning talking points, including, in some cases, outright falsehoods. Reason even had ChatGPT take various political ideology tests, revealing that it is politically leftist.

In a December UnHerd article, Brian Chau, a mathematician who writes frequently about OpenAI, warned that bias in artificial intelligence could leak into important domains of government and public life as the technology is widely adopted.

“‘Specific’ artificial intelligence, or paper-pushing at scale, offers a single change to cheaply rewrite the bureaucratic processes governing large corporations, state and federal government agencies, NGOs and media outlets,” Chau wrote.

“In the right hands, it can be used to eliminate political biases endemic to the hiring processes of these organizations. In the wrong hands, it may permanently catechize a particular ideology.”